Best Practices Guide for Local Prototyping of Serverless Applications

Local prototyping has become de rigueur for most web stack developers in the last few years. Even complex web backends are generally assumed to be emulatable from a developer’s laptop.

But this assumption starts to break down on the AWS platform, and serverless architecture in particular challenges the usual approach to local prototyping.

The guide below covers some of the known issues for local prototyping with serverless applications, general principles for getting started, and some best practices to achieve a stable local development process.

Known issues with serverless prototyping

There is no perfect local analog for serverless execution environments. When first setting up a serverless function via AWS Lambda, many developers will start by pasting code into the AWS console and waiting several minutes for that code to become available. This is reasonable only so long as Lambdas remain very, very simple.

If you have a complex Lambda function that handles many types of requests (a strategy known as "Fat Lambda"), the tactic of entering code and waiting for it to deploy will break down. The deploy time for Lambda functions is a few minutes, and most developers are used to detecting errors in their code by running it and seeing it fail.

Stretching the time to run code from a few seconds to several minutes slows the pace of development by an order of magnitude or more.

General goals for local prototyping

##### 1) A fast local development ‘loop’

In general, the longer the time between writing code and seeing it run, the slower features ship and the more bugs eventually surface. We want developers to build tools quickly, so we want to preserve a fast "development loop" in which they see the problems in their code as soon as possible after writing it.

##### 2) Preservation of infrastructure as code

Any solution we use should preserve the ‘infrastructure as code’ goal that underpins any professional serverless strategy. [AWS CloudFormation](https://aws.amazon.com/cloudformation/) should be used to template application environments (‘stacks’) including API gateway/access points, functions, and data sources.

This rules out ad hoc solutions like running our code in some other environment for testing, e.g. taking our Node Lambda code and exercising it as part of a local Node app. That would verify that our code at least parses correctly, but it would require many mock systems to simulate the other parts of our stack. Stick with the IaC methodology.

##### 3) Isolation of environments — sandboxing

When working on our local version we know that nothing is going to mess up our production application. This is another advantage of local prototyping beyond speeding up the development loop. For instance, testing account-creation won’t mess up the production database.

Whatever solution we come up with has to preserve this ‘sandbox’ advantage. Ideally, every developer would have their own sandbox, but at the very least, developers should be able to share one with no possible production side effects.
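One way to make the "no production side effects" rule hard to break by accident is a guard that runs before any local invocation. The TypeScript sketch below assumes each deployed environment exposes its name in a variable called ENVIRONMENT_NAME; that variable name and the list of protected names are hypothetical, not a Stackery or AWS convention.

```typescript
// Hypothetical guard: refuse to run a local invocation against production.
// ENVIRONMENT_NAME and the protected names below are illustrative assumptions.
const PROTECTED_ENVIRONMENTS: ReadonlySet<string> = new Set(["prod", "production"]);

export function assertSandbox(env: Record<string, string | undefined>): string {
  const name = env.ENVIRONMENT_NAME;
  if (!name) {
    // Fail closed: an unknown target is treated as unsafe.
    throw new Error("ENVIRONMENT_NAME is not set; refusing to guess which environment to use");
  }
  if (PROTECTED_ENVIRONMENTS.has(name.toLowerCase())) {
    throw new Error(`Refusing to run locally against protected environment "${name}"`);
  }
  // Safe to proceed: return the sandbox name for logging.
  return name;
}

// Usage (at the top of a local run): assertSandbox(process.env);
```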

Don’t forget that serverless should empower developers to change their resource configuration. Therefore, isolating environments when doing local prototyping is key to maintaining that empowerment and control!

Running serverless functions locally with the SAM CLI

Aware of these problems, a team at AWS released the Serverless Application Model (SAM) CLI in 2017. This tool adds a significant level of functionality for local prototyping.

Implementation

The SAM CLI uses Docker to create an execution environment similar to what your production Lambdas will run in. Critically, it measures the execution time and exit state for your Lambda functions, helping you identify issues that may cause them to run for an excessive amount of time. Of course, the primary use case here is error-identification in your code.
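To make "execution time and exit state" concrete, here is a toy TypeScript harness that reports those two facts for an async handler. It runs the handler in-process and only illustrates the kind of report you get; the SAM CLI itself runs your function inside a Docker container. The names invokeAndMeasure and InvocationReport are hypothetical.

```typescript
// Toy harness: invoke an async handler and report duration plus success/failure.
// In-process only; this is not how the SAM CLI is implemented.
type Handler<E, R> = (event: E) => Promise<R>;

interface InvocationReport<R> {
  ok: boolean;
  durationMs: number;
  result?: R;
  error?: string;
}

async function invokeAndMeasure<E, R>(handler: Handler<E, R>, event: E): Promise<InvocationReport<R>> {
  const start = Date.now();
  try {
    const result = await handler(event);
    return { ok: true, durationMs: Date.now() - start, result };
  } catch (err) {
    // A thrown error becomes a failed report instead of crashing the harness.
    const message = err instanceof Error ? err.message : String(err);
    return { ok: false, durationMs: Date.now() - start, error: message };
  }
}

// Usage: a failing handler is reported rather than propagated.
invokeAndMeasure(async () => {
  throw new Error("boom");
}, {}).then((report) => console.log(report.ok, report.error)); // false boom
```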

Limitations

The SAM CLI’s local invoke tools cannot simulate scaling issues, so certain problems (like handling spiky traffic) should be tested with options like canary deployments on production. An excellent guide for canary deploys with Lambdas by Yan Cui is available from Lumigo.
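For readers new to canary deployments, the core idea is a weighted split: a small share of requests goes to the new version while the rest stays on the stable one. On Lambda, AWS performs this split for you (for example, through weighted alias routing), so the TypeScript function below only illustrates the routing decision; chooseVersion is a hypothetical name.

```typescript
// Illustrates canary traffic splitting; on AWS the split is done by the platform.
type Version = "stable" | "canary";

function chooseVersion(canaryWeight: number, random: () => number = Math.random): Version {
  if (canaryWeight < 0 || canaryWeight > 1) {
    throw new RangeError("canaryWeight must be between 0 and 1");
  }
  // random() is in [0, 1), so a weight of 0.1 routes roughly 10% of calls to the canary.
  return random() < canaryWeight ? "canary" : "stable";
}
```

Injecting the random source keeps the decision deterministic in tests while defaulting to Math.random in normal use.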

Another issue that will affect almost all production environments is that the SAM CLI can only simulate some components of an application locally.

For example, when invoking a Lambda function that relies on API Gateway and an Amazon Aurora database, the SAM CLI can run the Lambda and the API locally without issue, but it can't run the database within the local Docker environment.

Letting local Lambda functions access AWS resources

In order to really run your Lambdas in a way that reflects how they’ll use a full application stack, you’ll need to manage a few hurdles:

- Assuming IAM roles to give your Lambda permission to access other resources

- Managing environment variables in a group: there may be multiple resources that are addressed through Lambda function environment variables, so you'll need a tool or system to swap in the environment variable values from a real deployment when the Lambda is invoked from a local machine.

- SSH tunneling to resources within a VPC
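The environment-variable hurdle can be sketched as a merge: start from the variables of the deployed function, then apply any local overrides. This TypeScript sketch assumes you've already fetched the deployed map (for example, the Environment.Variables field returned by aws lambda get-function-configuration); buildLocalEnv, missingVariables, and the variable names in the test are hypothetical.

```typescript
// Sketch: assemble the environment for a local invocation from a deployed function.
// Fetching the deployed variables is out of scope; they are passed in as a plain map.
type EnvMap = Record<string, string>;

function buildLocalEnv(deployed: EnvMap, localOverrides: EnvMap = {}): EnvMap {
  // Deployed values first, so the local run targets the same resources;
  // explicit local overrides (e.g. a more verbose log level) win.
  return { ...deployed, ...localOverrides };
}

function missingVariables(env: EnvMap, required: readonly string[]): string[] {
  // Report required names that are absent or empty before invoking the function.
  return required.filter((name) => !(name in env) || env[name] === "");
}
```

Checking for missing names up front turns a confusing runtime failure deep inside the handler into a clear error before the invocation starts.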

Environments

While a carefully documented set of migrations can give a developer a local copy of a development database, there are often components that either don't have good local analogs or simply aren't convenient to copy locally. Within the AWS ecosystem, some services can't currently be run accurately on a local machine. We could create a local SQL database in place of the Aurora Serverless database, but we couldn't guarantee that it would behave like the real execution environment.

The solution is to replicate as many resources as possible into “environments” where it's safe to experiment. We can't emulate Aurora databases locally, but we can create real Aurora databases and interact with them from our local function invocation.
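Reaching a real Aurora database from a local invocation works best when the function never hard-codes its endpoint. The TypeScript sketch below reads connection settings from environment variables, so the same code reaches the test environment's cluster whether it runs locally or in the cloud. DB_HOST, DB_PORT, and DB_NAME are hypothetical variable names, and no database driver is included.

```typescript
// Sketch: resolve database settings from the environment instead of hard-coding them.
// Variable names are illustrative assumptions.
interface DbConfig {
  host: string;
  port: number;
  database: string;
}

function loadDbConfig(env: Record<string, string | undefined>): DbConfig {
  const host = env.DB_HOST;
  const database = env.DB_NAME;
  if (!host || !database) {
    throw new Error("DB_HOST and DB_NAME must be set for this environment");
  }
  // Default to the MySQL port; PostgreSQL-compatible clusters use 5432.
  const port = Number(env.DB_PORT ?? "3306");
  if (!Number.isInteger(port) || port <= 0) {
    throw new Error(`Invalid DB_PORT: ${env.DB_PORT}`);
  }
  return { host, port, database };
}
```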

The Stackery CLI

The Stackery CLI goes one step further, extending the SAM CLI to let your locally running Lambda function communicate with cloud resources like databases. This lets you iterate on code very quickly in your local environment, even if the function relies on AWS resources that can't easily be emulated. Essentially, the Stackery CLI resolves the problems above automatically.

Using the Stackery CLI to invoke functions locally

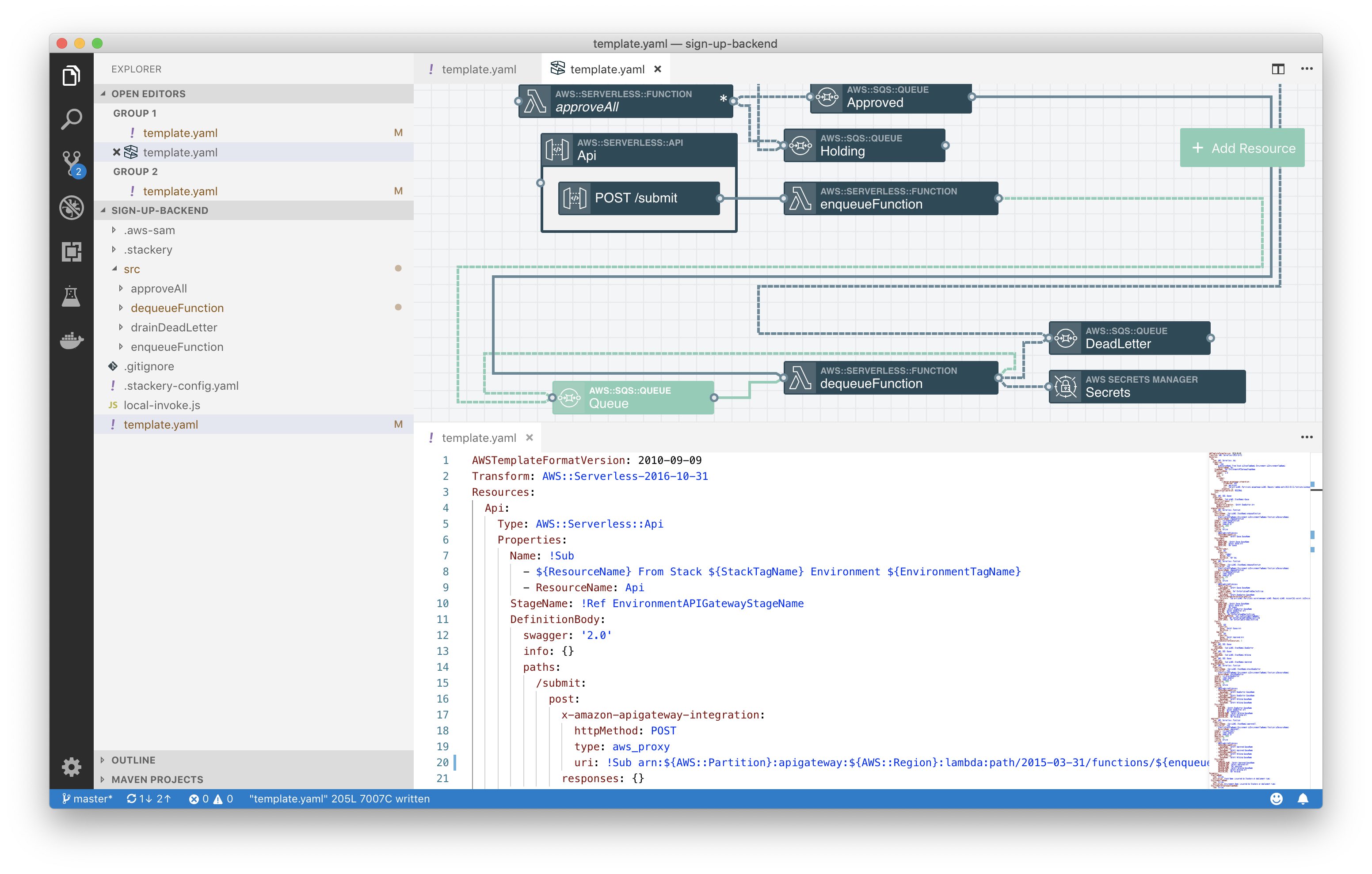

The process with the Stackery CLI starts with a CloudFormation template and a code repository for your Lambdas. You can use Stackery to create your CloudFormation template, as described in this detailed guide to the CLI and local invocation. The steps to get ready for local invocation are:

- Create a CloudFormation template

- Add needed environment variables and IAM permissions for resources to your Lambda Function (Using Stackery to wire a Function to other resources makes this easy)

- Write initial function code (Stackery’s default boilerplate works great)

- Deploy your application to AWS
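As a starting point for the "initial function code" step, here is a minimal TypeScript handler for an API Gateway-style request. The event and response interfaces are simplified stand-ins (real projects usually pull these types from the aws-lambda type definitions), and the id path parameter is hypothetical. Because TypeScript must be compiled, invoking a handler like this locally also requires the --build flag described under Build settings.

```typescript
// Minimal API Gateway-style handler; the shapes below are simplified assumptions.
interface ApiEvent {
  pathParameters?: Record<string, string | undefined> | null;
}

interface ApiResult {
  statusCode: number;
  headers?: Record<string, string>;
  body: string;
}

export const handler = async (event: ApiEvent): Promise<ApiResult> => {
  const id = event.pathParameters?.id;
  if (!id) {
    return { statusCode: 400, body: JSON.stringify({ message: "Missing path parameter: id" }) };
  }
  // Placeholder response; a real function would query the data source it is wired to.
  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ id }),
  };
};
```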

Once you've created a template and deployed it to AWS, you can invoke a local copy of your Lambda code with:

```shell
stackery local invoke -e test --aws-profile <your aws profile name>
```

The -e flag specifies the environment name. Stackery uses it to gather the environment variables for your Lambda functions from that environment and to assume the function's IAM role.

Stackery can manage the IAM permissions automatically using a linked AWS account.

Build settings

If you're using a compiled or transpiled language such as Go or TypeScript, you'll need to add the --build flag to the local invoke command. This builds the function before invoking it.

Debugging using VS Code

You can use Visual Studio Code's built-in debugger to step through your Lambda. To set it up, add the following configuration to your launch config file (.vscode/launch.json):

```jsonc
{
  "version": "0.2.0",
  "configurations": [
    {
      "name": "Attach to SAM CLI",
      "type": "node",
      "request": "attach",
      "address": "localhost",
      // Set the port to whatever you like, but it must match the --debug-port flag
      "port": 5656,
      // Local source folder of the function being debugged
      "localRoot": "${workspaceFolder}/src/getEvent",
      // Path where the Lambda container mounts the function code
      "remoteRoot": "/var/task",
      "protocol": "inspector",
      "stopOnEntry": true
    }
  ]
}
```

Then you can run:

```shell
stackery local invoke --stack-name local-demo --env-name test --function-id getEvent --debug-port 5656
```

Read the AWS docs on Step-Through Debugging Lambda Functions Locally for more information about configuring local debugging.

To get more detail or use debugging with another IDE, see the Stackery documentation on debugging locally.

You can also try Stackery's VS Code extension from the Visual Studio Marketplace and use it to edit your CloudFormation architecture, debug and develop any Lambda function locally, and more.
