The Economics of Serverless for IoT

It's no surprise that the rise of connected devices and the Internet of Things is coinciding with the movement toward Functions-as-a-Service and serverless computing. Serverless and its near-cousin, "edge computing," are both paradigms that pair compute with event triggers, and IoT opens the door to a whole new breed of event triggers.

In the classic model of the internet (as an aside: have we reached the point where there is now a "classic" internet?), resources were delivered to a user in response to that user's request. These predominantly static resources were gradually replaced by dynamically computed ones, and over time user requests were augmented with API calls and similar machine-generated requests. For decades, though, this model was fundamentally simple and worked well. Importantly, because it was driven primarily by human requests, it had the advantage of being reasonably predictable, and that made managing costs an achievable human task.

At small scale, a service could simply be deployed on a single machine. While not necessarily efficient, a single server has (at least in recent years) become very affordable, and efficiency at this scale is not typically of paramount importance. Larger deployments required more complex infrastructure, typically a load balancer distributing traffic across a pool of compute resources. Still, this predominantly human-requested compute load followed certain patterns: human-centric events have predictable peak traffic times and equally predictable troughs.

Earlier in my career, I led New Relic's Browser performance monitoring product. We monitored billions of page loads each day across tens of thousands of sites. At that scale, traffic becomes increasingly predictable. Small fluctuations in any given application are washed out in the aggregate of the world's internet traffic. With enough scale, infrastructure planning becomes fairly straightforward: there are no spikes at scale, only gently rolling curves.
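A back-of-the-envelope way to see why aggregation flattens the curve, assuming (as an idealization) that N applications generate independent loads X_1, ..., X_N, each with mean μ and standard deviation σ, is to look at the relative fluctuation of their total S_N = X_1 + ... + X_N:

$$
\mathbb{E}[S_N] = N\mu, \qquad \operatorname{Var}[S_N] = N\sigma^2, \qquad
\frac{\sqrt{\operatorname{Var}[S_N]}}{\mathbb{E}[S_N]} = \frac{\sqrt{N}\,\sigma}{N\mu} = \frac{1}{\sqrt{N}}\cdot\frac{\sigma}{\mu}
$$

So the relative fluctuation of the total shrinks as 1/√N; across 10,000 sites it is 1/100 of a single site's. Correlated events, such as much of the world watching the same match, do not average out this way, which is why aggregate traffic still shows rolling daily curves.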

In the human-centric event paradigm, events are triggered upon human request. While any individual person may be difficult to predict (or maybe not), in aggregate, people are predictable. They typically sleep at night. Most work during the day. Many watch the Super Bowl, and most of those who don't watch the World Cup. You get the idea.

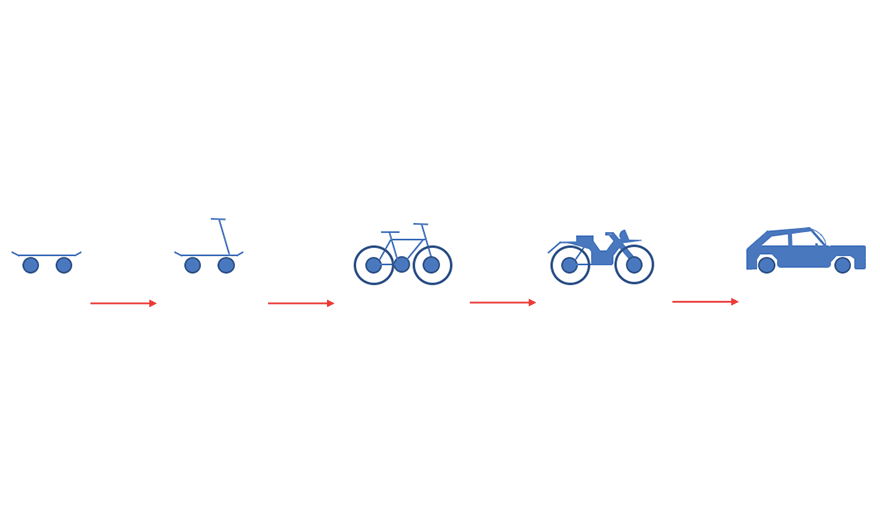

However, a major shift is underway, driven by the rapidly growing number of internet-connected devices, which generate a proliferation of new event triggers that do not follow human patterns.

The new breed of events

The Internet of Things is still in its infancy, but it's not hard to see where this trajectory leads. At the very least, it has the potential to increase internet traffic by an order of magnitude, but that may be the easy part compared with how it will change the nature of events.

While the old event model was human-centric, the new model is device-centric, and in many cases the requests these devices make will be triggered by events they sense in their environment. This is the heart of the problem: the environment is far less predictable than people. The old infrastructure model is a poor fit for the dynamism of the events that IoT gives rise to.

If you need an example of how unpredictable environment-centric events are, just think about airline flight delays. In an industry with razor-thin margins dependent on efficient utilization of multi-hundred-million-dollar capital assets, I doubt you'll find a more motivated group of forecasters. KLM currently ranks best globally for on-time arrivals, and it still gets it wrong 11.5% of the time. (At the bottom of the rankings are Hainan Airlines, Korean Air, and Air China, with delay rates greater than 67%.) Sure, people play a part in this unpredictability, but weather, natural disasters, government intervention, software glitches, and the complexity of the global airline network all compound the problem. Predicting environmental events is very, very challenging.

If the best of the best are unable to hit 90% accuracy, how can we ever achieve meaningful infrastructure utilization rates under the current model?

Serverless Economics

One of the overlooked advantages of serverless computing, as offered by AWS Lambda and Azure Functions, is the power of aggregation. Remember, there are no spikes at scale. And it's hard to envision a greater scale in the immediate future than that of aggregating the world's compute into public FaaS cloud offerings. By pooling your individual unpredictability with that of all their other customers, AWS and Azure are able to hedge away much of the environmental-event risk. In doing so, they can keep their shared infrastructure highly utilized while customers pay only for the capacity they actually use, and this will enable dramatic growth and efficiency for the far less predictable infrastructure needs of IoT.
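A toy Python simulation illustrates the aggregation effect. It assumes each hypothetical customer has a small hourly baseline load plus occasional large bursts triggered by environmental events, with customers independent of one another; all parameters are made up for illustration. The peak-to-mean ratio is roughly how much capacity you must provision relative to average usage.

```python
import random
import statistics

def bursty_customer_load(rng, hours=24 * 7, base=10.0, burst_prob=0.05, burst_size=200.0):
    """Hypothetical hourly request load for one IoT customer over a week.

    Most hours carry a small baseline load plus noise; occasionally an
    environmental event triggers a large burst. All parameters are
    illustrative assumptions, not measured values.
    """
    return [
        base + rng.uniform(0, base) + (burst_size if rng.random() < burst_prob else 0.0)
        for _ in range(hours)
    ]

def peak_to_mean(series):
    """Capacity needed for the busiest hour, relative to the average hour."""
    return max(series) / statistics.mean(series)

def aggregate(loads):
    """Sum independent customer loads hour by hour."""
    return [sum(hour) for hour in zip(*loads)]

rng = random.Random(42)
for n in (1, 10, 100, 1000):
    customers = [bursty_customer_load(rng) for _ in range(n)]
    total = aggregate(customers)
    print(f"{n:>5} customers: peak/mean = {peak_to_mean(total):.2f}")
```

A single customer has to provision for bursts several times its average load, while the pooled total stays close to its mean; the independence assumption is what makes this work.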

What's more, these public clouds can provide connected device manufacturers not just predictable performance and delivery but also predictable costs, through their pay-per-use model. Why is that? It's all about timing.

If you're running servers, the capacity you require depends largely on when the traffic hits. To handle large spikes, you might bulk up your capacity and thus run at a lower utilization rate, paying for unused capacity the rest of the time. This is a reasonable way to decrease failure risk, but it's an expensive solution.

But serverless pricing is time-independent. You pay the same fractional rate per transaction whether all of your traffic hits at once or arrives smoothly and consistently over time, and this price predictability will accelerate IoT adoption.
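To make the timing argument concrete, here is a minimal Python sketch comparing a server fleet sized for the peak hour against per-request billing, for two hypothetical days with the same 1.2 million requests: one smooth, one arriving in a single burst. The prices, per-server capacity, and 25% headroom are placeholder assumptions, not real AWS Lambda or Azure Functions rates.

```python
import math

# Hypothetical placeholder prices and capacities, not real cloud rates.
SERVER_COST_PER_HOUR = 0.10    # assumed hourly cost of one provisioned server
SERVER_CAPACITY_RPH = 10_000   # assumed requests per hour one server can handle
PRICE_PER_REQUEST = 0.000002   # assumed pay-per-use price per request

def fleet_size(hourly_requests, headroom=1.25):
    """Servers needed to absorb the peak hour plus a safety margin."""
    return math.ceil(max(hourly_requests) * headroom / SERVER_CAPACITY_RPH)

def provisioned_cost(hourly_requests, headroom=1.25):
    """The whole fleet is billed every hour, whether busy or idle."""
    return fleet_size(hourly_requests, headroom) * SERVER_COST_PER_HOUR * len(hourly_requests)

def utilization(hourly_requests, headroom=1.25):
    """Fraction of provisioned capacity that actually served traffic."""
    capacity = fleet_size(hourly_requests, headroom) * SERVER_CAPACITY_RPH * len(hourly_requests)
    return sum(hourly_requests) / capacity

def pay_per_use_cost(hourly_requests):
    """Per-request billing depends only on the total, not on when it arrives."""
    return sum(hourly_requests) * PRICE_PER_REQUEST

HOURS = 24
smooth = [50_000] * HOURS                  # 1.2M requests spread evenly
spiky = [0] * (HOURS - 1) + [1_200_000]    # the same 1.2M in a single hour

for name, load in (("smooth", smooth), ("spiky", spiky)):
    print(f"{name:>6}: provisioned ${provisioned_cost(load):.2f} "
          f"at {utilization(load):.0%} utilization, "
          f"pay-per-use ${pay_per_use_cost(load):.2f}")
```

Only the shape of the comparison matters here: the per-request cost is identical for both days, while the provisioned fleet's cost and utilization are dictated by the peak hour. At steady, high utilization a provisioned fleet can still be the cheaper option; the point is predictability.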

A quick refresher on Economics 101: price is determined by supply and demand, but economies of scale drive cost down as volume increases. Since connected device manufacturers are motivated by profit (the difference between price and cost), they're incentivized to increase volume (and lower their unit costs) while filling the market demand. And this is where things get interesting.

A big component of the cost of a connected device is supporting the service (by operating the infrastructure) over the device's lifespan. In the traditional model, those costs were very difficult to predict, so you'd probably want to estimate conservatively. This would raise the cost, which would potentially raise the device price, which would presumably decrease demand. This is a vicious cycle: with less demand you lose economies of scale, which raises the price further, and so on.

Serverless price predictability turns this into a virtuous cycle: lower (and predictable) costs allow for lower prices, which can then increase demand. And, to close the loop, this increase in IoT success paves the way for more serverless usage and better predictability from the increased aggregate traffic volume. Win-win-win.
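The feedback loop in the last two paragraphs can be sketched as a toy Python model. Everything in it is a hypothetical assumption rather than a model from the original argument: cost-plus pricing with a 30% markup, a linear demand curve, fixed costs amortized over the units sold, and a `safety_factor` that pads the lifetime service budget to stand in for conservative estimation. Each round, the demand generated by the current price becomes the next round's production volume.

```python
# Every number here is a hypothetical assumption chosen for illustration.
FIXED_COSTS = 10_000_000      # R&D and tooling, amortized over units sold
HARDWARE_COST = 20.0          # per-device bill of materials
EXPECTED_SERVICE_COST = 15.0  # expected lifetime infrastructure cost per device
MARKUP = 0.30                 # price = unit cost * (1 + MARKUP)
MARKET_SIZE = 1_000_000       # units demanded at a price of zero
MAX_PRICE = 150.0             # price at which demand falls to zero (linear demand)

def unit_cost(volume, safety_factor):
    """Per-device cost; the service budget is padded by safety_factor."""
    return FIXED_COSTS / volume + HARDWARE_COST + EXPECTED_SERVICE_COST * safety_factor

def demand(price):
    """Linear demand curve: fewer buyers as the price rises."""
    return max(0.0, MARKET_SIZE * (1 - price / MAX_PRICE))

def simulate(safety_factor, volume=200_000, rounds=10):
    """Feed each round's demand back in as the next round's production volume."""
    history = [volume]
    for _ in range(rounds):
        if volume == 0:
            break  # no sales left to amortize fixed costs over: the market collapsed
        price = unit_cost(volume, safety_factor) * (1 + MARKUP)
        volume = demand(price)
        history.append(volume)
    return history

conservative = simulate(safety_factor=2.0)  # unpredictable costs, padded estimate
predictable = simulate(safety_factor=1.1)   # predictable costs, tighter estimate
print("conservative:", [round(v) for v in conservative])
print("predictable: ", [round(v) for v in predictable])
```

With these made-up numbers, the padded estimate has no sustainable volume at all and sales fall to zero within two rounds, while the tighter estimate, starting from the same volume, climbs toward an equilibrium just over 515,000 units. The model does not capture the second half of the loop, where more IoT traffic improves the cloud providers' own aggregation.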
