How to do Serverless Local Development

The Serverless Dev Workflow Challenge

One of the biggest challenges when adopting serverless today is mastering the developer workflow. "How do I develop serverless locally?" "How should I test?" "Should I mock AWS services?" These are common serverless questions, and the answers out there have been unsatisfying. Until now.

The Challenges of the Serverless Development Workflow

There are a few reasons that developer workflow is a common pain point and source of failure for serverless developers:

- Serverless architecture relies primarily on cloudside managed services that cannot be run on a development laptop (for example, DynamoDB).

- Since infrastructure dependencies can't be run locally, creating a consistent local development environment is challenging.

- As a result, serverless developers are frequently forced to resort to suboptimal workflows where they must deploy each code change to a cloudside environment in order to execute it.

- Deploying each code change forces developers to spend time waiting, which is bad for developer productivity and happiness.

"Getting a smooth developer workflow is a challenge with serverless," said iRobot's Richard Boyd. "There are some people who think we should mock the entire cloud on our local machine and others who want to develop in the real production environment. Finding the right balance between these two is a very hard problem that I'm not smart enough to solve."

The Developer's Inner Loop

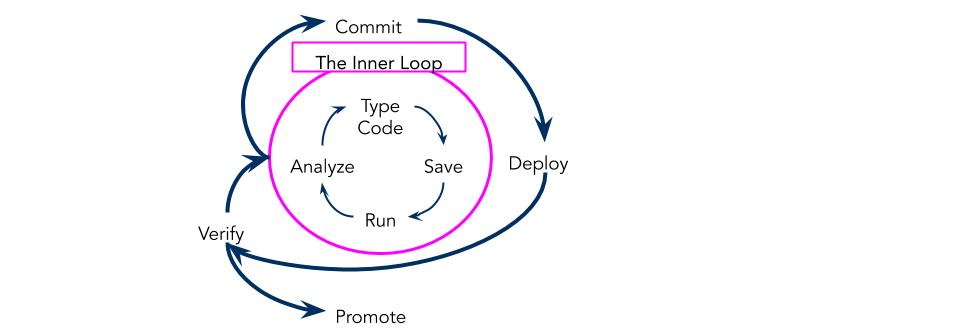

Developers spend the bulk of their coding time following a workflow that looks something like this:

- Change the code

- Run the code

- Analyze the output of running the code

- Rinse and Repeat

In a traditional local development environment, this loop can be completed in 3-10 seconds, meaning a developer can make and test many code changes in a minute. Today many serverless developers are forced into a slow inner loop which takes several minutes to complete. When this happens developers spend most of their time waiting for new versions of their code to deploy to the cloud where it can be run and analyzed (before making the next change). This cuts down on the number of code changes a developer can make in a day and results in context switching and frustration.

The Outer Loop

Outer loop changes, such as changes to service configurations and application architectures, occur less often, maybe a few times a day for apps under active development. This is an area where Stackery has a long history of helping developers express, deploy, and manage the pipeline for promoting applications to production.

Common Suboptimal Inner Loop Approaches to Serverless Local Development

Serverless architecture is still relatively new, so best practices are evolving and not widely known. However, there are a few common approaches to the serverless developer workflow that we know result in suboptimal productivity.

Suboptimal Approach #1: Push each change to the cloud

One common approach is to avoid developing locally and deploy each change to the cloud to run in the cloudside environment. The primary disadvantage of this is that developers must wait for the deploy process to complete for each minor code change. In some cases, these minor code change deploy times can be made faster, but only to a point and often at the cost of consistency. For example, Serverless Framework's sls deploy -f quickly packages and deploys an individual function.

While this does improve the inner loop cycle time it is still significantly slower than executing in a local environment and creates inconsistency in the cloudside environment. This makes it more likely problems will emerge in upstream environments.

Suboptimal Approach #2: Mock cloud services locally

Another common approach is to create mock versions of the cloud services to be used in the local environment. Many developers figure out ways to mock AWS service calls to speed up their dev cycles, and there are even projects such as LocalStack which attempt to provide a fully functional local AWS cloud environment. While this approach may initially appear attractive, it comes with a number of significant downsides. Most significantly, fully replicating AWS' rapidly evolving suite of cloud services is a monumental task.

Inevitably there will be inconsistencies between the local mocks and real cloudside services, especially for recently added features. With this approach, the local environment is not consistent with the cloudside environment, and somewhat cruelly, any bugs resulting from these inconsistencies will still need to be debugged and resolved cloudside which means more time spent following Suboptimal Approach #1.
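To make that inconsistency risk concrete, here is a minimal sketch of a hand-rolled local mock. `FakeTable`, `save_order`, and the `id` key are hypothetical names chosen for illustration; the class only imitates the `put_item`/`get_item` call shape of a boto3 DynamoDB table resource. The mock accepts an item with no primary key, a write the real service rejects with a validation error, so code that passes locally can still fail cloudside.

```python
class FakeTable:
    """Hypothetical in-memory stand-in for a DynamoDB table keyed on `id`."""

    def __init__(self):
        self._items = []

    def put_item(self, Item):
        # The real service checks that the primary key is present.
        # This naive mock does not, which is exactly the inconsistency.
        self._items.append(dict(Item))
        return {}

    def get_item(self, Key):
        # Return the first stored item whose attributes match the key.
        for item in self._items:
            if all(item.get(name) == value for name, value in Key.items()):
                return {"Item": dict(item)}
        return {}  # like boto3, no "Item" entry when nothing matches


def save_order(table, order):
    """Code under test: writes an order record to the table."""
    table.put_item(Item=order)


if __name__ == "__main__":
    table = FakeTable()
    save_order(table, {"id": "order-1", "total": 42})
    save_order(table, {"total": 7})  # missing key: accepted locally only
    print(table.get_item(Key={"id": "order-1"}))
```

Every gap like this has to be found and patched by hand in the mock, and each one that is missed surfaces later as a cloudside bug.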

The Optimal Local Serverless Development Workflow

Pull Cloudside Services to Your Local Code

There is a best practice today for creating a serverless dev environment. The benefits of this approach are:

- A tight inner loop (3-7 seconds)

- High consistency with the cloudside environment

- No need to maintain localhost mocks of cloudside services

Following this approach, you will execute local versions of your function code in the context of a deployed cloudside environment. A key component of this approach is to query the environment and IAM configuration directly from your cloudside development environment. This avoids the need for keeping a local copy of environment variables and other forms of bookkeeping needed to integrate with cloudside services.
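As a rough illustration of the idea (not a description of how any particular tool implements it), the sketch below reads a deployed function's environment variables and execution role, obtains temporary credentials for that role, and runs a local handler under them. The helper names are hypothetical. The client arguments are assumed to behave like boto3's Lambda and STS clients, using only `get_function_configuration` and `assume_role`. It also assumes the role's trust policy allows the developer to assume it.

```python
import os
from contextlib import contextmanager


def fetch_cloudside_config(lambda_client, function_name):
    """Read env vars and the execution role ARN from the deployed function."""
    config = lambda_client.get_function_configuration(FunctionName=function_name)
    variables = config.get("Environment", {}).get("Variables", {})
    return dict(variables), config["Role"]


def assume_function_role(sts_client, role_arn):
    """Get temporary credentials for the function's role.

    Assumption: the role's trust policy lets the developer assume it.
    """
    response = sts_client.assume_role(
        RoleArn=role_arn, RoleSessionName="cloudlocal-dev"
    )
    creds = response["Credentials"]
    return {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["SessionToken"],
    }


@contextmanager
def patched_environ(overrides):
    """Temporarily apply overrides to os.environ, then restore it."""
    saved = dict(os.environ)
    os.environ.update(overrides)
    try:
        yield
    finally:
        os.environ.clear()
        os.environ.update(saved)


def invoke_locally(handler, event, lambda_client, sts_client, function_name):
    """Run a local handler with the cloudside function's env and permissions."""
    variables, role_arn = fetch_cloudside_config(lambda_client, function_name)
    env = {**variables, **assume_function_role(sts_client, role_arn)}
    with patched_environ(env):
        return handler(event, None)  # no Lambda context object in this sketch
```

With boto3 installed, the clients would be `boto3.client("lambda")` and `boto3.client("sts")`, and `function_name` would be the name of the function deployed in your dev environment. Because nothing is copied into a local config file, the local run picks up configuration and permission changes as soon as they are deployed.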

The result? Consistency between how your code behaves locally and how it behaves in the cloudside environment. For example, one common debugging headache results from differences in service-to-service permissions and configurations between local and cloudside resources. This setup cuts off that entire class of problems. The bottom line? Fast local development cycles, reduced debugging friction, and the ability to ship new functionality faster as a result.

This approach sets you up to rapidly iterate on code locally, deploying to your dev environment only when you need to make environment configuration changes or have made significant progress on function code. Once the code in the development environment is ready for a PR, it can be deployed to other environments (test, staging, prod) via CI/CD automation or manually triggered commands.

Setting Up Cloudlocal Serverless Development

We've been iterating on serverless code for a few years now at Stackery, sharpening the saw and homing in on a prescriptive approach to optimize our development workflow. Several of us nosed our way into the cloudlocal approach. As we shared our experience with other pioneering serverless engineers, we found a growing consensus that this was the "right way to serverless" and that the productivity and flexibility it unlocked were game-changing.

stackery local invoke unlocks a more efficient and logical workflow for serverless projects using AWS Lambda. If you've spent any time in serverless development, you know how overdue and crucial this is.

Be sure to check out our doc on Cloudlocal Development, too!
