Running Lambdas on your Laptop

## An FAQ on SAM Local with the SAM CLI

Serverless has the potential to bring massive ops advantages to projects of all sizes, but while it presents great business benefits, we need to spare a thought for how teams develop on serverless.

I recently published ‘Serverless Development is Broken’, a list of concerns about how developers cope with the long deploy times inherent in a cloud-only code environment. A frequent response I’ve received since is ‘Why not use the SAM CLI tools to do local serverless?’ Here is my answer to that question. It takes the form of a dive into how the SAM CLI and SAM Local can indeed improve your Lambda development process, but require more to take advantage of the entire menu of AWS serverless...

Who is this Sam fellow and what does he have to do with AWS?

SAM is not me shouting at Sam Goldstein. It stands for Serverless Application Model, the open-source framework for describing serverless applications’ resources (that is, the functions, the resources that support those functions, and how they’re connected). SAM is built on top of AWS CloudFormation.

What does SAM Local let me do?

SAM Local, a component of the SAM CLI tools, lets you run your Lambdas and API Gateway endpoints locally. It gives you a local URL to send requests to your Lambdas and inspect the responses.
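Here is a rough sketch of what "a local URL" means in practice. Once `sam local start-api` is running, any HTTP client can exercise your functions. By default it listens on `http://127.0.0.1:3000`. The `/hello` route used below is hypothetical, so substitute whatever paths your template's API events define.

```python
import json
import urllib.request
from typing import Optional, Tuple

# Default address of `sam local start-api`; adjust if you started it with --host/--port.
LOCAL_API = "http://127.0.0.1:3000"


def local_url(path: str, base: str = LOCAL_API) -> str:
    """Join the local API base URL and a route path such as '/hello'."""
    return base.rstrip("/") + "/" + path.lstrip("/")


def call_local(path: str, payload: Optional[dict] = None) -> Tuple[int, str]:
    """GET (or POST a JSON body to) the locally emulated API and return (status, body).

    urllib raises HTTPError for 4xx/5xx responses, which surfaces handler errors loudly.
    """
    data = json.dumps(payload).encode() if payload is not None else None
    req = urllib.request.Request(
        local_url(path),
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST" if data is not None else "GET",
    )
    # Generous timeout: the first request may wait while the Docker image is pulled.
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.status, resp.read().decode()
```

With the local API running, `call_local("/hello")` returns the status code and body your Lambda produced. Without the server running, the call fails with a connection error.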

How does SAM CLI run a local version of a Lambda? Don’t we need servers for that?

Your Lambda is run locally in a Docker container whose setup is based on the specs in your CloudFormation template.

What do I need to do to run my Lambda code via the SAM CLI?

You need a local copy of your code, a SAM template file that describes your stack, and Docker installed to run the container.
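To give a sense of scale, here is a minimal sketch of the code half: a Python handler for an API Gateway proxy event. The file and function names (`app.py`, `lambda_handler`) are assumptions. In a real stack, the function resource's `Handler` property in your template (for example `app.lambda_handler`) tells SAM CLI which function to load. Calling the function directly with a hand-built event is roughly what `sam local invoke` does with an event file, except that SAM runs it inside the container.

```python
import json


def lambda_handler(event, context):
    """Hypothetical handler for an API Gateway (REST API, proxy integration) event."""
    # queryStringParameters is None rather than {} when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # Proxy integrations expect a dict with a statusCode and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }


if __name__ == "__main__":
    # A trimmed-down sample event; real API Gateway events carry many more fields.
    sample_event = {"httpMethod": "GET", "path": "/hello", "queryStringParameters": {"name": "sam"}}
    print(lambda_handler(sample_event, None))
```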

Writing a SAM template for CloudFormation is… extremely difficult?

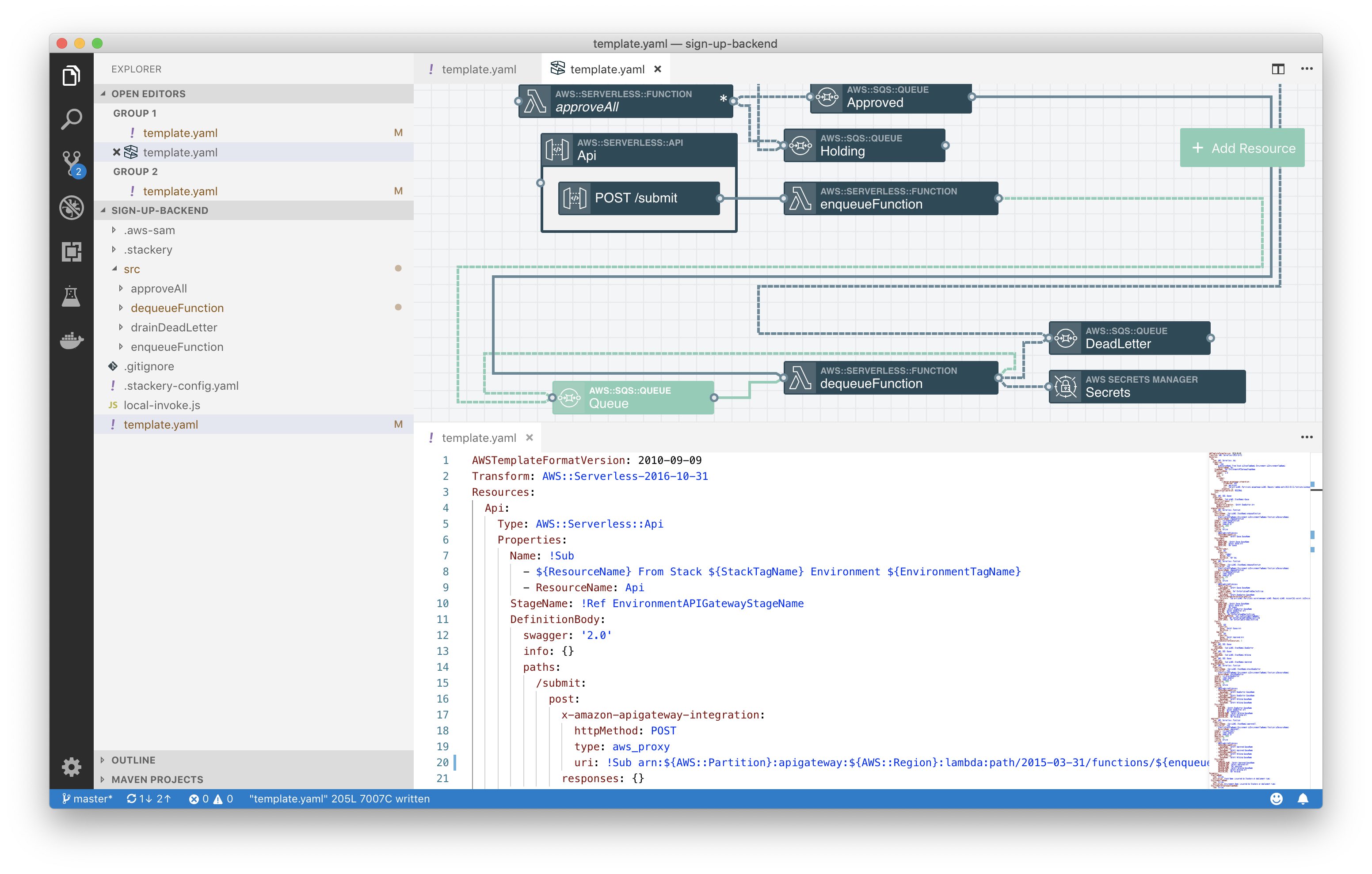

That’s not a question, but I forgive you. There are a number of tools that construct templates from a graphical canvas. The best is Stackery, which lets you build complex stacks and export a nice, portable template file.

Why would I want to run Lambdas locally?

By now we at Stackery have written extensively about the frustrations of developing serverless code. Essentially, without local tools, you have to wait a few minutes to see each code change take effect. This isn’t really a problem when you’re releasing code to production, but it’s a serious issue during development. Without a way to run your code locally, development slows way down.

So SAM Local solves the problem?

It solves the problem for developing and debugging the internals of your Lambdas, yes. If you have an error in your code that would keep your Lambdas from executing, you can find it with SAM Local.

However, suppose the error that’s going to break your Lambda is a problem with how you request data from another service (like, say, a database or another Lambda). In that case you won’t be aware of it until you move your code into the cloud, because SAM CLI has no way to emulate those other services, nor can it send requests to the cloud-hosted versions.

Let’s put it another way: if I were writing a Lambda to access a database, there are three kinds of problems I’d expect to see with my Lambda code:

- My code has syntax errors that prevent it from parsing, or it raises errors at runtime

- When I request data from the DB, the request isn’t formatted correctly

- After getting the requested data back, the Lambda hits errors trying to parse it (e.g. it raises errors inappropriately when some fields are blank)

Of these three categories, only the first can be found and fixed before the Lambda is connected to a database. Problems in the third category are also more likely to be found when working with production data.
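The three categories can be made concrete with a hypothetical Python sketch. The handler reaches its database through an injected `fetch_user` function. That function is an illustrative assumption, not any particular SDK, and it lets us pass a stub when running locally. The catch is that the stub only returns the data shape we *expect*. This is why category 2 (a malformed request) and category 3 (surprising data, such as a blank field) tend to surface only against the real service.

```python
from typing import Callable, Optional


def parse_user(record: dict) -> dict:
    """Category 3 lives here: turning whatever the database returned into our own shape."""
    # Defensive parsing: a missing or blank email becomes None instead of raising.
    email = (record.get("email") or "").strip() or None
    return {"id": record["id"], "email": email}


def handle(event: dict, fetch_user: Callable[[dict], Optional[dict]]) -> dict:
    """Hypothetical handler; fetch_user stands in for a real database client call."""
    # Category 2 lives here: the request shape we send. Only the real DB can reject it.
    query = {"id": str(event["user_id"])}
    record = fetch_user(query)
    if record is None:
        return {"statusCode": 404}
    return {"statusCode": 200, "user": parse_user(record)}


def stub_fetch(query: dict) -> Optional[dict]:
    """Local stand-in for the database: it can only return data we already anticipated."""
    return {"id": query["id"], "email": ""}


# Category 1 (syntax or runtime errors) shows up as soon as this runs at all.
print(handle({"user_id": 42}, stub_fetch))
# prints {'statusCode': 200, 'user': {'id': '42', 'email': None}}
```

Swapping the stub for a real client changes nothing in `handle`, and that is the point. The logic can be exercised locally, but only a real backend can confirm that the query format and the returned data match your assumptions.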

How does Stackery CloudLocal help?

Stackery provides a crucial gateway to let you connect your SAM CLI-hosted local Lambda with your cloud resources. This means you can run, debug, and re-write your code quickly, at the speed of local development. And those Lambdas can still talk to cloud-hosted resources.

What about Lambdas talking to other Lambdas?

Stackery CloudLocal lets your locally-run Lambda talk to any AWS-hosted resource, so your local Lambda can talk to other Lambdas hosted in the cloud.

Okay I’m ready to go!

Again, not a question, but if you haven’t already, create a free Stackery account and get started creating your serverless apps. You can use the CloudFormation SAM templates that Stackery generates to run with SAM CLI, or use our CloudLocal tools to run your Lambda locally and connect it to cloud resources.
