Serverless is Awesome For APIs

The biggest use of serverless technologies today is in event-driven workflows in the backend of a system. For example, an S3 bucket emits an event when a video is uploaded, and a serverless function responds by spinning up a Docker container to transcode the video. However, serverless technologies can provide even bigger wins when they are used for API services.

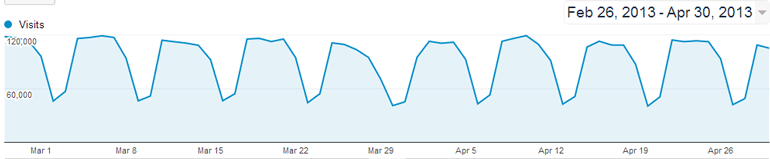

One of the biggest challenges faced when hosting an API service is the volatility of traffic. Here's an example of traffic patterns for a typical API service:

Notice how the traffic goes up and down every day in relatively large amounts. Before serverless there were two technologies to help handle increased traffic loads: request queuing and auto-scaling of compute resources.

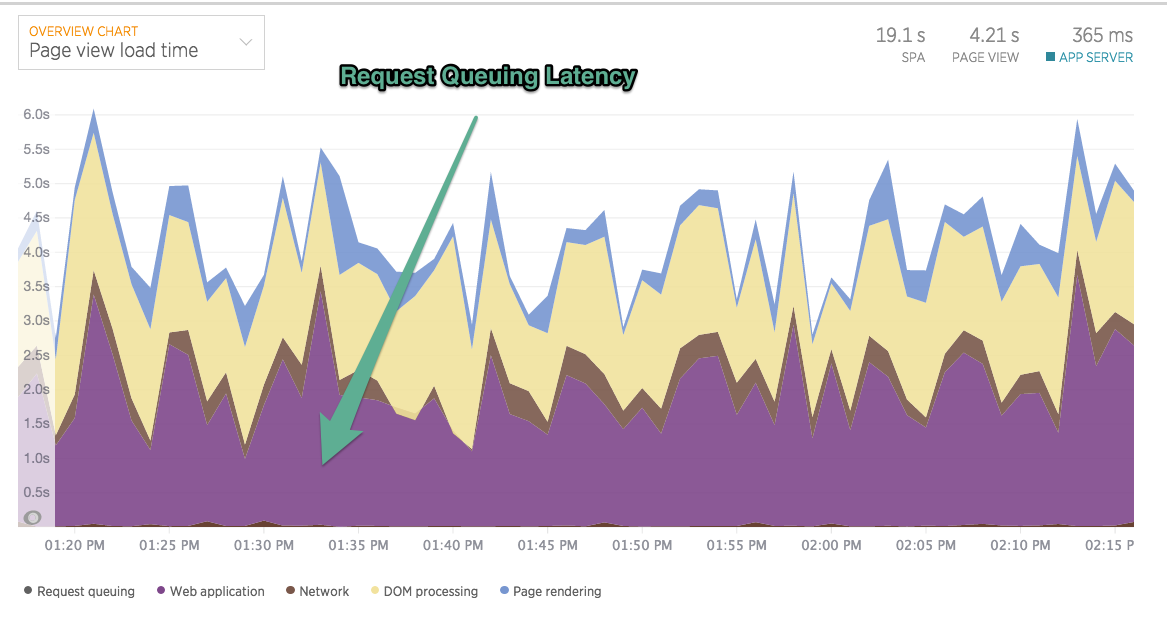

Request Queuing

A request queue is a layer that sits between your load balancer and your application. When many requests come to the server in parallel faster than the application can handle them, the queue will hold some of the requests until the application can handle them. But this adds latency to requests:
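To make the latency cost of queuing concrete, here is a toy simulation of a FIFO request queue. It is a sketch under simplifying assumptions: every request takes the same fixed `service_time`, a fixed pool of `workers` pulls from one shared queue, and the function name and numbers are hypothetical rather than taken from any real load balancer.

```python
def queue_wait_times(arrival_times, service_time, workers=1):
    """Return how long each request waits in a FIFO queue before being served.

    Simplifying assumptions: every request takes the same fixed service_time,
    and `workers` application processes pull from one shared queue.
    """
    free_at = [0.0] * workers  # time at which each worker next becomes free
    waits = []
    for arrival in sorted(arrival_times):
        # Hand the request to whichever worker frees up earliest.
        i = min(range(workers), key=lambda w: free_at[w])
        start = max(arrival, free_at[i])
        waits.append(start - arrival)  # time spent sitting in the queue
        free_at[i] = start + service_time
    return waits


if __name__ == "__main__":
    burst = [0.0] * 5  # five requests arrive at the same instant
    print([round(w, 2) for w in queue_wait_times(burst, service_time=0.1)])
    # → [0.0, 0.1, 0.2, 0.3, 0.4]
```

Each request in the burst waits for every request ahead of it, so queuing delay grows with the size of the spike. Spreading the same five requests 0.2 seconds apart produces no waiting at all, which is why the queue only hurts under bursty traffic.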

Auto-Scaling

If your request load varies widely, you may need to auto-scale your compute resources. While this works, it brings two more challenges. First, you need to hire or contract expensive engineers to make existing services auto-scalable. Second, if your traffic is very volatile, with large spikes at random times, auto-scaling often cannot add capacity quickly enough to handle all the traffic.

Serverless

The solution to these problems is the horizontal scalability of serverless technologies:

- Using an API gateway offloads the complexity of managing request queuing to the cloud service provider, which can also provision bandwidth horizontally at this layer to reduce latency.

- Auto-scaling latency is a non-issue because serverless compute resources can also be scaled horizontally with high utilization efficiency.

- Any application developer can write serverless functions, which offloads the expensive operational work of auto-scaling compute resources to the infrastructure service provider.
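To show how little scaling logic the developer writes, here is a minimal sketch of an API handler. It assumes AWS Lambda behind an Amazon API Gateway REST API using the Lambda proxy integration (the post does not name a provider beyond S3). The `name` query parameter and greeting response are hypothetical illustrations.

```python
import json


def handler(event, context):
    """AWS Lambda entry point for an API Gateway proxy integration (assumed setup).

    With the REST API (payload format 1.0) event shape, query string parameters
    arrive under "queryStringParameters", which is None when there are none.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter
    # No scaling code here: the provider runs as many concurrent copies of this
    # function as incoming requests require, within the account's concurrency limits.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a minimal hand-built event, not a full API Gateway payload.
    print(handler({"httpMethod": "GET", "queryStringParameters": {"name": "API"}}, None))
```

The function only maps a request to a response. Queuing at the gateway and provisioning compute for each burst of requests are handled by the provider, which is the division of labor the list above describes.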

Give serverless technologies a try the next time you have scalability concerns around your API services!
