Serverless Operations 101

For the last several years, serverless application architectures have been rapidly building momentum. Just three short years ago, AWS had barely released AWS Lambda, and serverless was the realm of technical pioneers. Now most mainstream companies with engineering teams are looking at how to leverage serverless tech to accelerate their teams and increase their bottom line.

The Promise of Serverless

It's easy to see why there's so much enthusiasm from both the technical and business-minded. The central promise of serverless tech is what every software engineer who has deployed production services has dreamed of at some point. The dream is to not think about servers, to focus only on code and functionality, not the details of the infrastructure on which it runs. No long provisioning cycles with IT Ops. No more weeks spent developing configuration management playbooks in Chef, Ansible, Puppet, and the like. Just point your latest code at the cloud and go.

Business people love the serverless promise too. It means faster time to market, lower infrastructure costs, and more staff time spent creating business value instead of managing infrastructure. Compute is a commodity. The business can focus on the thing that makes it special instead of pouring resources into infrastructure management.

NoOps Nightmares

This central promise is why serverless is revolutionizing how businesses develop software, but, as anyone who has run business-critical production services will tell you, it has also led some serverless advocates to buy into certain fallacies and misconceptions. This is where NoOps creeps in: the idea that certain architectures (such as serverless) will completely remove the need for modern engineering organizations to do operations. If you're running on a cloud provider's serverless autoscaling function-as-a-service (FaaS) platform, then aren't you outsourcing operations to the cloud provider? Doesn't this mean the team will now be able to fully focus on development, and things will run just fine when they're pushed to production?

The NoOps fallacy arises out of a fundamental misunderstanding of why businesses invest in operationalization. NoOps advocates assume operational problems are a product of technical architecture, and that better architectures (like serverless) can eliminate them. This fallacy can seem compelling, since there's a nugget of truth in it. Serverless teams are able to eliminate a wide swath of traditional operations tasks, such as provisioning servers, managing server configuration changes, patching operating systems, etc. However, this misses the larger point. Engineering orgs operationalize due to the criticality of their applications, not due to their technical architecture.

If you're running a business-critical application, then you need operations. Architectural decisions may make it easier or harder to achieve the operationalization you need, but no technology will remove the need to make sure the systems your customers depend on stay up.

If you're not sure why, just think about the six hours Washington state spent without 911 service due to a programming error. Could the same bug have impacted a serverless architecture? Absolutely. The system relied on an internal counter which maxed out in the millions. It's just as easy to write an integer overflow bug and deploy it to Lambda.
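To make that concrete, here is a hypothetical Python Lambda handler with the same class of bug. It does not reproduce the 911 system's actual code; the signed 32-bit field, the `last_call_id` event key, and the rejection check are all assumptions for illustration. Python's own integers never overflow, so the sketch uses `ctypes.c_int32` to mimic a fixed-width field, such as a database column or a value shared with code in another language.

```python
import ctypes
from typing import Any


def next_call_id(last_id: int) -> int:
    """Increment a counter as if it were stored in a signed 32-bit field."""
    # ctypes.c_int32 does no overflow checking, so (2**31 - 1) + 1 wraps to -2**31,
    # much like a 32-bit int in Java
    return ctypes.c_int32(last_id + 1).value


def handler(event: dict, context: Any) -> dict:
    """Hypothetical Lambda handler that assigns each incoming call an ID."""
    call_id = next_call_id(event["last_call_id"])
    if call_id <= 0:
        # Once the counter wraps, every call fails this check: a total outage,
        # no matter how well the platform underneath scales
        return {"statusCode": 503, "body": "call rejected"}
    return {"statusCode": 200, "body": f"routed call {call_id}"}
```

Lambda will happily autoscale this function to handle every incoming request; it will simply reject all of them once the counter wraps.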

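Another example is Azure's worldwide meltdown on February 29, 2012, caused by a leap-year bug. Could the same class of outage emerge in a serverless architecture? Absolutely. As Microsoft states in its root cause analysis (RCA), where "GA" refers to the guest agent running inside each virtual machine: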

> The leap day bug is that the GA calculated the valid-to date by simply taking the current date and adding one to its year. That meant that any GA that tried to create a transfer certificate on leap day set a valid-to date of February 29, 2013, an invalid date that caused the certificate creation to fail.

Nothing about FaaS protects you from writing a program that miscalculates dates and cascades into a system-wide failure.
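The "add one to the year" logic from the RCA is just as easy to write in a Lambda function. A minimal Python sketch of the bug and one possible fix follows; `valid_to_buggy` and `valid_to_fixed` are hypothetical names, and clamping to February 28 is only one policy (moving to March 1, or using a fixed 365-day lifetime, are others).

```python
from datetime import date


def valid_to_buggy(today: date) -> date:
    """Mirror the logic in the RCA: keep the month and day, add one to the year."""
    # Raises ValueError on Feb 29, because Feb 29 of the following year does not exist
    return today.replace(year=today.year + 1)


def valid_to_fixed(today: date) -> date:
    """Same rule, but clamp Feb 29 to Feb 28 when the next year has no leap day."""
    try:
        return today.replace(year=today.year + 1)
    except ValueError:
        # Only Feb 29 can fail here; clamping to Feb 28 is one reasonable policy
        return today.replace(year=today.year + 1, day=28)


print(valid_to_fixed(date(2012, 2, 29)))  # 2013-02-28
```

Calling `valid_to_buggy(date(2012, 2, 29))` raises `ValueError` on exactly one day every four years, which is precisely the kind of latent bug that passes every test run on an ordinary day.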

Serverless Operations == Better Tools and Automation

The need for operations stems from running valuable systems that people depend on, so it follows that serverless teams responsible for business-critical functionality need to operationalize. There's a whole list of steps businesses take to fully operationalize engineering teams (a topic for another blog post, maybe?), but it all starts with being able to answer two questions:

- "How do I know my software is up and working as expected?"

- "Can we push a fix to production quickly and safely?"

Being able to answer the first question means using tools that create visibility into production systems (for example, collecting error traces, logs, and performance metrics) and then feeding that information back to the team.
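As a minimal sketch of the logs-and-metrics side, assuming a Python Lambda runtime, a hypothetical `log_metrics` decorator can write one JSON line per invocation recording the handler's duration and outcome. The field names are illustrative, not a required schema; the point is that structured lines like these are easy for a centralized logging system to filter and aggregate.

```python
import functools
import json
import time
from typing import Any, Callable, List

Handler = Callable[[dict, Any], Any]


def log_metrics(emit: Callable[[str], None] = print) -> Callable[[Handler], Handler]:
    """Emit one JSON log line per invocation with the handler's duration and outcome."""
    def decorate(handler: Handler) -> Handler:
        @functools.wraps(handler)
        def wrapped(event: dict, context: Any) -> Any:
            start = time.perf_counter()
            outcome = "error"  # overwritten only if the handler returns normally
            try:
                result = handler(event, context)
                outcome = "ok"
                return result
            finally:
                # Runs on success and on exceptions, so failures are logged too
                emit(json.dumps({
                    "function": handler.__name__,
                    "outcome": outcome,
                    "duration_ms": round((time.perf_counter() - start) * 1000, 3),
                }))
        return wrapped
    return decorate


# In Lambda the default `print` writes to stdout, which CloudWatch Logs captures.
# Here the lines are collected in a list so they can be inspected.
captured: List[str] = []


@log_metrics(emit=captured.append)
def handler(event: dict, context: Any) -> dict:
    return {"ok": True}
```

Exceptions still propagate after the line is written, so this adds visibility without changing how the function fails.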

Being able to answer the second means using tools that make the path to production predictable, repeatable, and reliable (especially in a crisis), so that you can quickly roll back or roll forward to a known good state.

Serverless makes it so easy to ship new projects and features that serverless teams commonly ship functionality the business comes to rely on before fleshing out their operations story (or, in some cases, before even recognizing they need one). Combine this with the ephemeral nature of FaaS, where there's no server to SSH into, and things can get pretty hairy in a crisis.

So how does one start to operationalize in the serverless world?

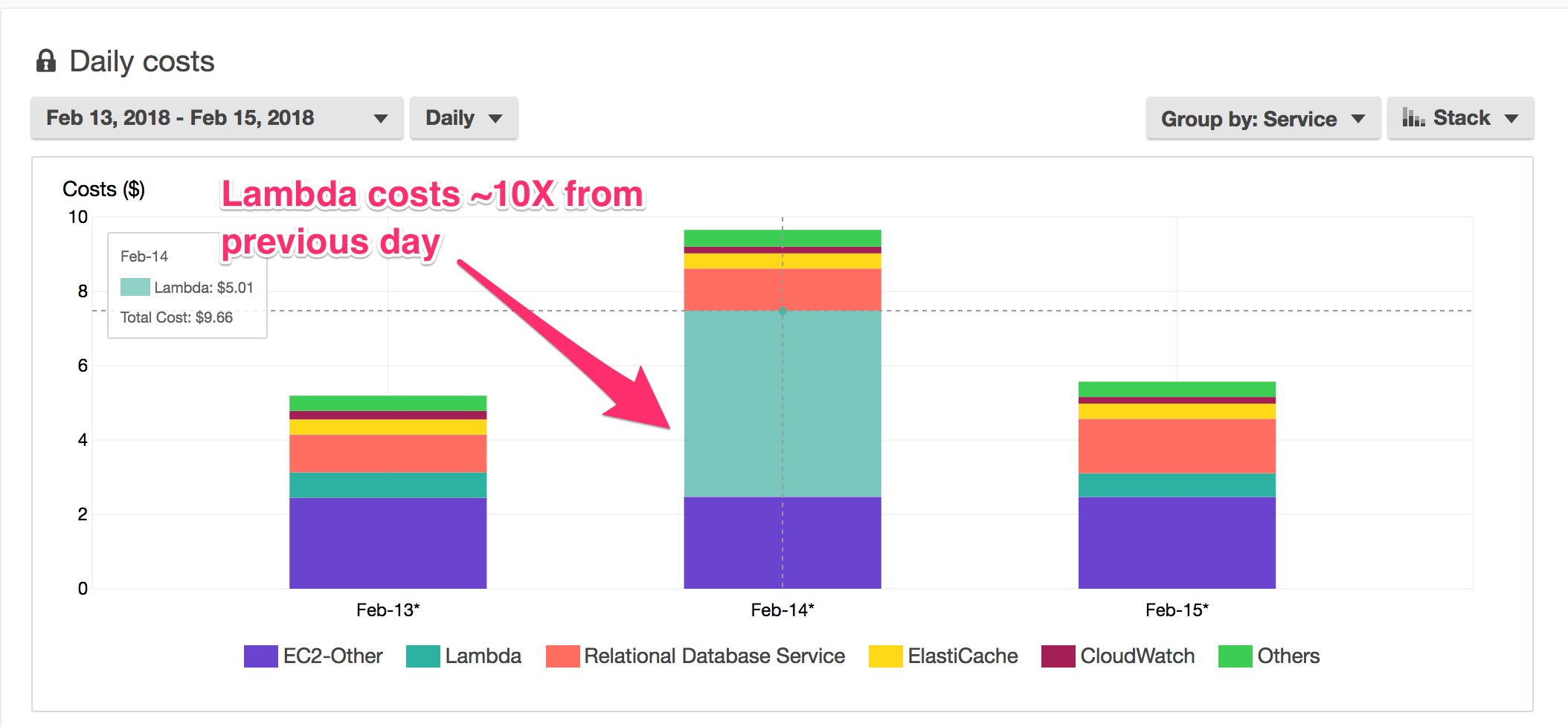

The answer is to look hard at your tools and ensure they address the two questions above. At Stackery we leverage some of the intrinsic benefits of serverless to give our engineering team (and customers) cross-cutting visibility into production serverless environments. For example, since all our code runs in Lambda functions, we automatically wrap each function with code that intercepts errors and routes them to a 3rd party error tracking service. Because this happens automatically when we package function dependencies, we get comprehensive error tracking across our serverless infrastructure: every function, every time. The same approach works for logs, which are often a critical tool for diagnosing production problems. Our packaging and deployment tooling automatically configures resources to forward logs to a centralized logging system, so they're there when you need them. That same packaging and deployment automation is also the central point from which we automatically configure CloudWatch logs, metrics, and alarms across all our functions and resources.
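Stackery applies its wrapper automatically at packaging time; the decorator below only sketches the idea by hand, assuming a Python Lambda runtime. `with_error_tracking` and `report_to_tracker` are hypothetical names, and `report_to_tracker` stands in for whatever call a real error tracking SDK exposes (here it just appends to a list).

```python
import functools
import traceback
from typing import Any, Callable, List, Tuple

Handler = Callable[[dict, Any], Any]
Reporter = Callable[[str, dict], None]


def with_error_tracking(report: Reporter) -> Callable[[Handler], Handler]:
    """Wrap a Lambda-style handler so uncaught errors are reported, then re-raised."""
    def decorate(handler: Handler) -> Handler:
        @functools.wraps(handler)
        def wrapped(event: dict, context: Any) -> Any:
            try:
                return handler(event, context)
            except Exception:
                # Send the formatted traceback and the triggering event to the tracker
                report(traceback.format_exc(), event)
                # Re-raise so Lambda still records the invocation as an error
                raise
        return wrapped
    return decorate


# Hypothetical stand-in for a third-party tracker's SDK: it just collects reports
reported: List[Tuple[str, dict]] = []


def report_to_tracker(trace: str, event: dict) -> None:
    reported.append((trace, event))


@with_error_tracking(report_to_tracker)
def handler(event: dict, context: Any) -> dict:
    return {"total": event["a"] / event["b"]}
```

Re-raising matters: swallowing the exception would hide the failure from Lambda's own error metrics and from any alarms built on them.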

Equally important is considering the tools you'll need to push changes into production. It's common for engineering teams to spot a serious problem in production, quickly identify a fix, and then spend a long time working through the manual steps needed to deploy that fix. In many cases the best course of action is to roll back to a previously deployed version, but without good tooling and a well-defined process, this conceptually simple operation can be slow and ad hoc. In the worst case, a manual, multi-step deployment process may lead to mistakes that make the production problem worse. This is why operationalized serverless teams rely on automated, continuous-delivery-style deployment pipelines to ensure that they can repeatedly and reliably push production changes, even in a crisis. At Stackery we apply this model (both internally and for customers) and have developed tools that let us ship complex serverless systems with the push of a button instead of through a complex, error-prone process.
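One common way to make rollback a one-step operation on AWS Lambda is to route traffic through an alias that points at an immutable published version. The sketch below assumes that setup and is not Stackery's implementation. It calls three real boto3 Lambda client methods (`get_alias`, `publish_version`, `update_alias`); `deploy_with_rollback`, the `smoke_test` callback, and `FakeLambdaClient` are hypothetical, the last existing only so the example runs without AWS credentials.

```python
from typing import Any, Callable, Dict


def deploy_with_rollback(client: Any, function_name: str, alias: str,
                         smoke_test: Callable[[], bool]) -> str:
    """Shift `alias` to a newly published version; revert it if the smoke test fails.

    Assumes `client` behaves like boto3.client("lambda") and that the new code
    has already been uploaded to $LATEST. Returns the version the alias ends on.
    """
    # Remember the known-good version that is serving traffic right now
    previous = client.get_alias(FunctionName=function_name, Name=alias)["FunctionVersion"]
    # Snapshot the uploaded code as an immutable, numbered version
    candidate = client.publish_version(FunctionName=function_name)["Version"]
    client.update_alias(FunctionName=function_name, Name=alias, FunctionVersion=candidate)
    try:
        healthy = smoke_test()
    except Exception:
        healthy = False  # a crashing check counts as a failed check
    if healthy:
        return candidate
    # Rolling back is one alias update, not a rebuild and redeploy
    client.update_alias(FunctionName=function_name, Name=alias, FunctionVersion=previous)
    return previous


class FakeLambdaClient:
    """In-memory stand-in for the three boto3 calls used above (illustration only)."""

    def __init__(self) -> None:
        self.alias_version = "3"
        self.latest_version = 3

    def get_alias(self, FunctionName: str, Name: str) -> Dict[str, str]:
        return {"FunctionVersion": self.alias_version}

    def publish_version(self, FunctionName: str) -> Dict[str, str]:
        self.latest_version += 1
        return {"Version": str(self.latest_version)}

    def update_alias(self, FunctionName: str, Name: str, FunctionVersion: str) -> Dict[str, str]:
        self.alias_version = FunctionVersion
        return {"FunctionVersion": FunctionVersion}
```

Because callers invoke the alias rather than a specific version, moving the alias is all it takes to shift traffic back to the previous release, and the same call works in a calm rollout or in the middle of an incident.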

To Build or Not to Build?

Serverless teams need to operationalize (at least if they're running critical systems), and the foundation of good operations is good tooling. This leads to the dilemma many serverless teams face today (as do the many teams considering diving into serverless tech). Serverless promises immense technical and business advantages, but because it is cutting-edge technology, traditional operational tooling is difficult or impossible to integrate. Many teams end up pouring large amounts of time into developing their own in-house solutions to these common problems, which undercuts the central promise of serverless and distracts from their core mission. Each week we talk with serverless teams that have engineers focused full time on configuring IAM rules, creating CloudWatch alarms, and trying to reason about how their production systems are behaving, often with a business person looking over their shoulder saying "I thought this was supposed to be NoOps!" Sure, they don't have to worry about servers, but developing one-off systems for packaging, testing, deployment, logging, error tracking, uptime monitoring, and so on is a lot of work, and it may mean less time to focus on code and core business value instead of more.

The Serverless Future is Bright

The promise of serverless is all about focusing on the core of your software business. This is why serverless architectures rely heavily on 3rd party services and integrations. It's part of the mindset. Why reinvent the wheel? Why spend weeks writing your own authentication service when you can just hook up to AWS Cognito or Auth0 and get back to the real problem you're trying to solve? The same pattern is emerging with regard to operational tooling. As more and more companies recognize the benefits of serverless, they are becoming increasingly reliant on these serverless applications. As serverless engineers, we're increasingly asking ourselves: why spend weeks (or months) writing our own operational tooling when we can just hook up production-grade tooling (like Stackery) and get back to the real problem we're trying to solve? Make sure your serverless team is thinking through operational concerns like production visibility and repeatable deployments (ideally before major problems hit) and that you have production-grade tooling in place to protect you from falling victim to the NoOps nightmare.